Compile-time vs. compile-time

- By H. S. Teoh, March 2017

One of D's oft-touted features is its awesome compile-time capabilities, which open up wonderful meta-programming opportunities, code-generation techniques, compile-time introspection, DSLs that are transformed into code at compile-time and therefore incur zero runtime overhead, and plenty more. Acronyms like CTFE have become common parlance amongst D circles.

However, said "compile-time" capabilities are also often the source of much confusion and misunderstanding, especially on the part of newcomers to D, often taking the form of questions posted to the discussion forum by frustrated users such as: "Why doesn't the compiler let me do this?!", "Why doesn't this do what I think it should do?", "Why can't the compiler figure this simple thing out?! The compiler is so stupid!", and so on.

This article hopes to clear up most of these misunderstandings by explaining just what exactly D's "compile-time" capabilities are, give a brief overview of how it works, and thereby hopefully give newcomers to D a better handle on what exactly is possible, and what to do when you run into a snag.

Contents

There's compile-time, and then there's compile-time

Part of the confusion is no thanks to the overloaded term "compile-time". It sounds straightforward enough -- "compile-time" is simply the time when the compiler does whatever it does when it performs its black magic of transforming human-written D code into machine-readable executables. Therefore, if feature X is a "compile-time" feature, and feature Y is another "compile-time" feature, then X and Y ought to be usable in any combination, right? Since, after all, it all happens at "compile-time", so surely the compiler, with its access to black magic, should be able to just sort it all out, no problem.

The reality, of course, is a bit more involved than this. There are, roughly speaking, actually at least two distinct categories of D features that are commonly labelled "compile-time":

- Template expansion, or abstract syntax tree (AST) manipulation; and

- Compile-time function evaluation (CTFE).

While these two take place at "compile-time", they represent distinct phases in the process of compilation, and understanding this distinction is the key to understanding how D's "compile-time" features work.

(The D compiler, of course, has more distinct phases of compilation than these two, but for our purposes, we don't have to worry about the other phases.)

Template expansion / AST manipulation

One of the first things the compiler does when it compiles your code, is to transform the text of the code into what is commonly known as the Abstract Syntax Tree (AST).

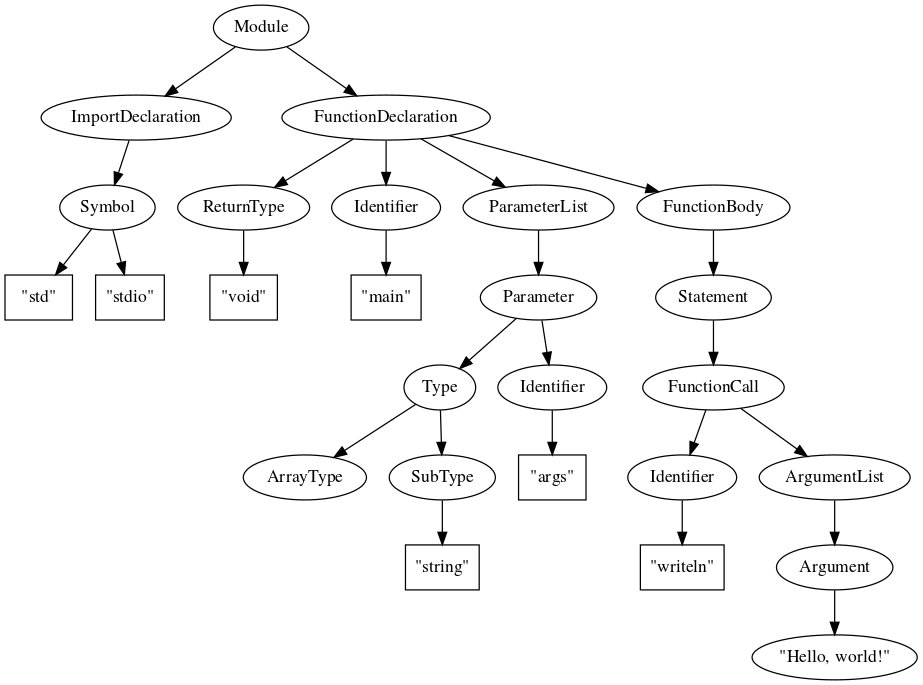

For example, this program:

import std.stdio;

void main(string[] args)

{

writeln("Hello, world!");

}

is parsed into something resembling this:

(Note: this is not the actual AST created by the compiler; it is only a simplified example. The actual AST created by the compiler would be more detailed and have more information stored in each node.)

The AST represents the structure of the program as seen by the compiler, and contains everything the compiler needs to eventually transform the program into executable machine code.

One key point to note here is that in this AST, there are no such things as variables, memory, or input and output. At this stage of compilation, the compiler has only gone as far as building a model of the program structure. In this structure, we have identifiers like args and writeln, but the compiler has not yet attached semantic meanings to them yet. That will be done in a later stage of compilation.

Part of D's powerful "compile-time" capabilities stem from the ability to manipulate this AST (to some extent) as the program is being compiled. Among the features that D offers are templates and static if.

Templates

- If you are already familiar with the basics of templates, you may want to skip to the following section.

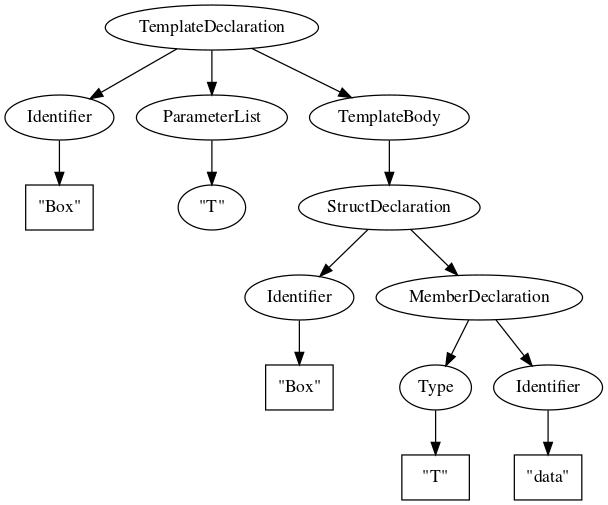

One of D's powerful features is templates, which are similar to C++ templates. Templates can be thought of as code stencils, or stencils of a subtree of the AST, that can be used to generate AST subtrees. For example, consider the following template struct:

struct Box(T)

{

T data;

}

In D, this is shorthand for:

template Box(T)

{

struct Box

{

T data;

}

}

Its corresponding AST tree looks something like this:

When you instantiate the template with a declaration like:

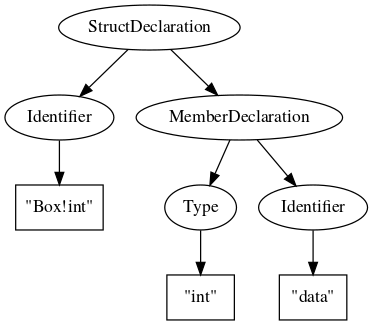

Box!int intBox;

for example, what the compiler effectively does is to make a copy of the AST subtree under the TemplateBody node and substitute int for every occurrence of T in it. So it is as if the compiler inserted this generated AST subtree into the program's AST at this point:

Which corresponds to this code fragment:

struct Box!int

{

int data;

}

(Note that you cannot actually write this in your source code; the name Box!int is reserved for the template expansion process and cannot be directly defined by user code.)

Similarly, if you instantiate the same template with a different declaration, such as:

Box!float intBox;

it is as if you had declared something like this:

struct Box!float

{

int data;

}

Effectively, you are creating "virtual AST subtrees" every time you instantiate a template, which get grafted into your program's AST when the template is instantiated. This feature is great for avoiding boilerplate code: you can factor out the common bits of code into a template, and thereby adhere to the DRY (Don't Repeat Yourself) principle.

static if

D templates are only the beginning of what D is capable of doing. Another very powerful tool in the AST manipulation phase of D compilation is static if. For example:

struct S(bool b)

{

static if (b)

int x;

else

float y;

}

The static if here means that the boolean parameter b is evaluated when the compiler is expanding the template S. The value of must be known at the time the template is being expanded. In D circles, we often say that the value must be known "at compile-time", but it is very important to more precise. We will elaborate on this more later.

If the value is true, then the else branch of the static if is pruned away from the expanded template. That is, when you write:

S!true s;

it is as if you declared:

struct S!true

{

int x;

}

Note that the else branch is completely absent from the expanded template. This is a very important point.

Similarly, when you write:

S!false t;

it is as if you had declared:

struct S!false

{

float y;

}

Note that the if branch is completely absent from the expanded template. This is also a very important point.

In other words, static if is a choice that affects the effective AST seen by later compilation phases.

To drive this point home further: at this stage, when static if is evaluated, notions like variables, memory, and I/O don't exist yet. We are manipulating the structure of the program; not its execution. In the above code, x and y are merely identifiers, not variables or member fields of a struct. Their semantic meaning is not assigned until subsequent stages of compilation.

Why is this point so important? Because it relates to a common misunderstanding of D's "compile-time" capabilities as it concerns CTFE, or Compile-Time Function Evaluation. Let's talk about that next.

CTFE

CTFE stands for Compile-Time Function Evaluation. This is an extremely powerful feature that D offers, and is similar to C++'s constexpr feature (though in the author's opinion far more powerful).

The first and most important thing to understand about CTFE is that it happens after the AST manipulation phase has been completed. More precisely, it happens when the compiler has "finalized" the effective AST of that part of the program, and is now ready to assign semantic meaning to the various constructs represented by the AST. Identifiers are assigned meanings as modules, functions, function arguments, variables, and so on, and other semantic analyses such as VRP (Value Range Propagation) are performed.

Constant-folding, glorified

Part of this semantic analysis is constant-folding. For example, if we wrote something like this:

int i = 3*(5 + 7);

it would be a waste of computational resources to perform the indicated calculation (add 5 to 7, multiply the result by 3) at runtime, because all the arguments of the calculation are constants known to the compiler, and the result will never change at runtime. Of course, this particular example is trivial, but imagine if this line of code were inside a busy inner loop in a performance-critical part of the program. If we folded this constant expression into its resulting value, the program would run faster, since it wouldn't have to repeat this same calculation over and over, and indeed, it wouldn't even need to perform the calculation at all, since the answer is already known by the compiler.

The D compiler detects these constant expressions and replaces them with their value: it is as if we wrote:

int i = 36;

This process is called constant-folding, and is implemented by basically all modern compilers of any language today. So it is nothing to get excited about. The D compiler, however, takes this game to a whole new level. Consider, for example, this code:

int f(int x)

{

return x + 1;

}

int i = 3*(5 + f(6));

Again, if we perform the calculation manually, we see that the value of the expression is 36. However, this time, the constants involved in the expression are masked by the function call to f. But since the definition of f is visible to the compiler, and all it does is to add a constant value 1 to its argument, the compiler should be able to deduce that, again, the expression is constant and does not need to be performed at runtime.

But what if f was more complicated than merely adding a constant to its argument, for example:

int f(int x)

{

int a = 0;

for (int i=1; i <= x/2; i++)

{

a += i;

}

return a + 1;

}

int i = 3*(5 + f(6));

Again, the value of f(6) is constant, but in order to know its value, the compiler has to effectively run this function during compilation. And in order to do that, the compiler essentially compiles the body of f into a state where it can be run inside what amounts to a D virtual machine inside the compiler.

This, in a nutshell, is how CTFE came about, in D's history. There is a limit as to how much this D virtual machine can do, but there's an ongoing trend of expanding its capabilities as far as possible. As of this writing, the D compiler is able to execute significant chunks of the Phobos standard library during compilation, thus making many library features accessible during compilation without needing to implement them the second time inside the compiler. Furthermore, a focused effort is being spearheaded by Stephen Koch to replace the current CTFE engine with an even more powerful one based on a bytecode interpreter, that promises superior CTFE performance and better memory management, and eventually, more features.

A different "compile-time"

Coming back to the topic at hand, though, notice that when we speak of CTFE, we speak of "virtual machines" and "bytecode interpreters". This implies that by this point, the code has gone far enough through the compilation process that it is essentially ready to be turned into runtime executable code.

In particular, this means that it has long passed the AST manipulation stage. Which in turn implies that code that can be evaluated by CTFE can no longer make use of AST manipulation constructs like static if. This is because in order for CTFE to work, things such as variables and memory must exist, otherwise there is nothing to execute or interpret. But in the AST manipulation phase, notions such as variables and memory don't exist yet -- we're still manipulating the structure of the program.

Thus, even though CTFE happens at "compile-time" just as AST manipulation happens at "compile-time", this is actually a different "compile-time". This is why the terminology "compile-time" is confusing, because it gives the false impression that all of these features, AST manipulation and CTFE alike, are lumped together into a single, amorphous blob of time labelled "compile-time", and that the compiler can somehow make it all magically just work, by fiat.

So, to summarize: D's "compile-time" capabilities can be grouped into two distinct phases, AST manipulation and CTFE, and AST manipulation happens before CTFE. We may denote this by a unidirection arrow between the two phases:

- AST manipulation → CTFE

meaning that code can only progress one way, from AST manipulation to CTFE, not the other way round.

Case Studies

Let's take a look at a few common pitfalls that D learners often run into, and see how this principle applies: