Difference between revisions of "Compile-time vs. compile-time"

m (Quickfur moved page User:Quickfur/Compile-time vs. compile-time to Compile-time vs. compile-time: It's been 5 years, I think the article should be ready by now :)) |

|||

| (76 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | + | :''By H. S. Teoh, March 2017'' | |

| − | One of D's oft-touted features is its awesome compile-time capabilities, | + | One of D's oft-touted features is its awesome "compile-time" capabilities. However, said capabilities are also often the source of much confusion and misunderstanding, especially on the part of newcomers to D, often taking the form of questions posted to the discussion forum by frustrated users such as the classic: "Why can't the compiler read this value at compile-time, since it's clearly known at compile-time?!" |

| − | + | This article hopes to clear up most of these misunderstandings by explaining just what D's "compile-time" capabilities are, how it works, and how to solve some commonly-encountered problems. | |

| − | |||

| − | This article hopes to clear up most of these misunderstandings by explaining just what | ||

==There's compile-time, and then there's compile-time== | ==There's compile-time, and then there's compile-time== | ||

| − | Part of the confusion is no thanks to the overloaded term "compile-time". It sounds straightforward enough -- "compile-time" is | + | Part of the confusion is no thanks to the overloaded term "compile-time". It sounds straightforward enough -- "compile-time" is just the time when the compiler performs its black magic of transforming human-written D code into machine-readable executables. So, the reasoning goes, if feature X is a "compile-time" feature, and feature Y is another "compile-time" feature, then surely X and Y ought to be usable in any combination, right? Since, after all, it all happens at "compile-time", and the compiler ought to be able to sort it all out magically. |

| − | The reality, of course, is a ''bit'' more involved than this. There are | + | The reality, of course, is a ''bit'' more involved than this. There are, roughly speaking, at least ''two'' distinct categories of D features that are commonly labelled "compile-time": |

* Template expansion, or abstract syntax tree (AST) manipulation; and | * Template expansion, or abstract syntax tree (AST) manipulation; and | ||

* Compile-time function evaluation (CTFE). | * Compile-time function evaluation (CTFE). | ||

| + | |||

| + | While these two take place at "compile-time", they represent distinct phases in the process of compilation, and understanding this distinction is the key to understanding how D's "compile-time" features work. | ||

| + | |||

| + | In particular, the AST manipulation features apply to an early stage in the process of compilation, whereas CTFE applies to a much later stage. To understand this, it is helpful to know roughly the stages of compilation that a piece of code goes through: | ||

| + | |||

| + | # Lexical analysis and parsing, where the compiler scans the human-written text of the program and turns it into a syntax tree representing the structure of the code; | ||

| + | # AST manipulation, where templates are expanded and other AST manipulation features are applied; | ||

| + | # Semantic analysis, where meaning is assigned to various AST features, such as associating an identifier with a data type, another identifier with a function, and another identifier with a variable. | ||

| + | # Code generation, where the semantically-analyzed code is used to emit the machine code that goes into the executable. | ||

| + | |||

| + | CTFE sits somewhere between semantic analysis and code generation, and basically involves running D code inside an interpreter (called the CTFE engine) embedded inside the compiler. | ||

| + | |||

| + | Since CTFE can only take place after semantic analysis has been performed, it no longer has access to AST manipulation features. Similarly, at the AST manipulation stage, no semantics have been attached to various structures in the code yet, and so "compile-time" features that manipulate the AST can't access things inside the CTFE engine. | ||

| + | |||

| + | Thus, as far as D's "compile-time" features are concerned, there are really ''two'' different "compile-times"; an early phase involving manipulating the program's AST, and a late phase involving CTFE. Confusing these two is the source of most of the problems encountered when using D's "compile-time" features. | ||

| + | |||

| + | These two phases interact in more complex ways than it might appear at first, though. In order to understand how this works, we need to look at each of these two categories of "compile-time" features in more detail. | ||

| + | |||

| + | ==Template expansion / AST manipulation== | ||

| + | |||

| + | One of the first things the compiler does when it compiles your code, is to transform the text of the code into what is commonly known as the Abstract Syntax Tree (AST). | ||

| + | |||

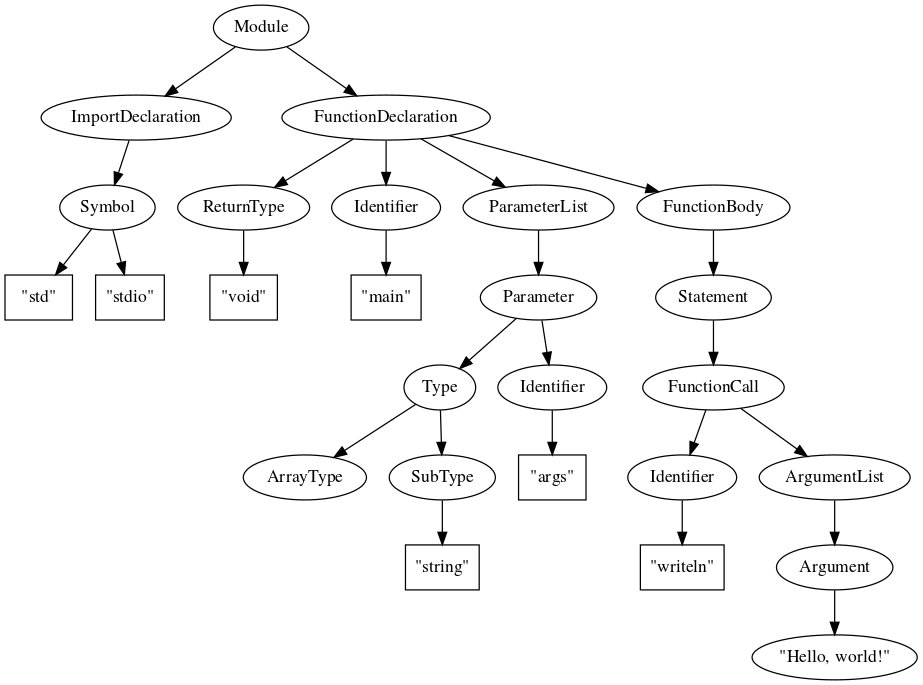

| + | For example, this program: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | import std.stdio; | ||

| + | void main(string[] args) | ||

| + | { | ||

| + | writeln("Hello, world!"); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | is parsed into something resembling this: | ||

| + | |||

| + | [[Image:AST.svg]] | ||

| + | |||

| + | (Note: this is not the actual AST created by the compiler; it is only an example to illustrate the point. The AST created by the compiler may differ slightly in structure and/or details.) | ||

| + | |||

| + | The AST represents the structure of the program as seen by the compiler, and contains everything the compiler needs to eventually transform the program into executable machine code. | ||

| + | |||

| + | One key point to note here is that in this AST, there are no such things as variables, memory, or input and output. At this stage of compilation, the compiler has only gone as far as building a model of the program structure. In this structure, we have identifiers like <code>args</code> and <code>writeln</code>, but the compiler has not yet attached semantic meanings to them yet. That will be done in a later stage of compilation. | ||

| + | |||

| + | Part of D's powerful "compile-time" capabilities stem from the ability to manipulate this AST to some extent as the program is being compiled. Among the features that D offers are templates and <code>static if</code>. | ||

| + | |||

| + | ===Templates=== | ||

| + | |||

| + | :''If you are already familiar with the basics of templates, you may want to skip to the following section.'' | ||

| + | |||

| + | One of D's powerful features is templates, which are similar to C++ templates. Templates can be thought of as ''code stencils'', or AST patterns that can be used to generate AST subtrees. For example, consider the following template struct: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct Box(T) | ||

| + | { | ||

| + | T data; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | In D, this is a shorthand for a so-called ''eponymous template'' that, written out in full, is: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | template Box(T) | ||

| + | { | ||

| + | struct Box | ||

| + | { | ||

| + | T data; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

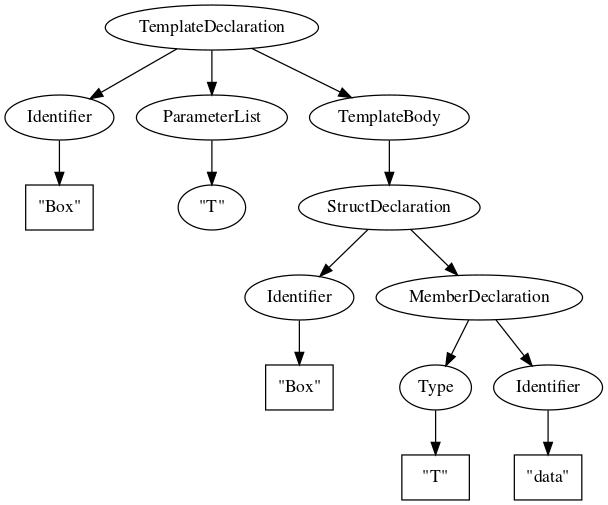

| + | Its corresponding AST tree looks something like this: | ||

| + | |||

| + | [[Image:template1.svg]] | ||

| + | |||

| + | When you instantiate the template with a declaration like: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | Box!int intBox; | ||

| + | </syntaxhighlight> | ||

| + | |||

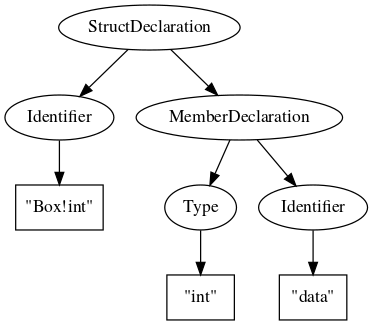

| + | for example, the type <code>Box!int</code> may not yet be defined. However, the compiler automatically makes a copy of the AST subtree under the <code>TemplateBody</code> node and substitute <code>int</code> for every occurrence of <code>T</code> in it. This generated AST subtree is then included among the program's declarations: | ||

| + | |||

| + | [[Image:Template-example1.svg]] | ||

| + | |||

| + | Which corresponds to this code fragment: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct Box!int | ||

| + | { | ||

| + | int data; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | (Note that you cannot actually write this in your source code; the name <code>Box!int</code> is reserved for the template expansion process and cannot be directly defined by user code.) | ||

| + | |||

| + | Similarly, if you instantiate the same template with a different declaration, such as: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | Box!float floatBox; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | it is as if you had declared something like this: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct Box!float | ||

| + | { | ||

| + | float data; | ||

| + | } | ||

| + | |||

| + | Box!float floatBox; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | In effect, you are creating new AST subtrees every time you instantiate a template, which get grafted into your program's AST. | ||

| + | |||

| + | One common use for this feature is to avoid boilerplate code: you can factor out commonly-repeated bits of code into a template, and the compiler would automatically insert them each time you instantiate the template. This saves you a lot of repetitive typing, and thereby allows you to adhere to the DRY (Don't Repeat Yourself) principle. | ||

| + | |||

| + | D templates, of course, go much, much farther than this, but a full exposition of templates is beyond the scope of this article. | ||

| + | |||

| + | The important point here is that template expansion happens in the AST manipulation phase of compilation, and therefore template arguments must be known ''at the time the code in question is in its AST manipulation phase''. In common D parlance, we tend to say that template arguments must be known "at compile-time", but this is often not precise enough. It is more precise to say that template arguments must be known during the AST manipulation phase of compilation. As we shall see later, being more precise will help us understand and avoid many of the common problems that D learners encounter when they try to use D's "compile-time" features. | ||

| + | |||

| + | ===static if=== | ||

| + | |||

| + | Another powerful feature in the AST manipulation phase of D compilation is <code>static if</code>. This construct allows you to choose a subtree of the AST to be compiled, or, conversely, which a subtree to be pruned. For example: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct S(bool b) | ||

| + | { | ||

| + | static if (b) | ||

| + | int x; | ||

| + | else | ||

| + | float y; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | The <code>static if</code> here means that the boolean parameter <code>b</code> is evaluated when the compiler is expanding the template <code>S</code>. The value of must be known ''at the time the template is being expanded''. In D circles, we often say that the value must be known "at compile-time", but it is important to more precise. We will elaborate on this more later. | ||

| + | |||

| + | If the value of <code>b</code> is <code>true</code>, then the <code>else</code> branch of the <code>static if</code> is pruned away from the expanded template. That is, when you write: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | S!true s; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | it is as if you declared: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct S!true | ||

| + | { | ||

| + | int x; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | ''(You can't actually write this in your source code, of course, since the name <code>S!true</code> is reserved for the template expansion process and cannot be directly defined by user code. But this is just to illustrate the point.)'' | ||

| + | |||

| + | Note that the <code>else</code> branch is ''completely absent'' from the expanded template. This is a very important point. | ||

| + | |||

| + | Similarly, when you write: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | S!false t; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | it is as if you had declared: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | struct S!false | ||

| + | { | ||

| + | float y; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Note that the <code>if</code> branch is ''completely absent'' from the expanded template. This is also a very important point. | ||

| + | |||

| + | In other words, <code>static if</code> is a choice that affects the effective AST seen by later compilation phases. | ||

| + | |||

| + | To drive this point home further: at this stage, when <code>static if</code> is evaluated, notions like variables, memory, and I/O don't exist yet. We are manipulating the ''structure'' of the program; ''not'' its execution. In the above code, <code>x</code> and <code>y</code> are merely identifiers; they have not yet been assigned any semantic meanings like variables or data fields that reside in a concrete offset in the <code>struct</code>. This is done in subsequent stages of compilation. | ||

| + | |||

| + | Why is this point so important? Because it relates to a common misunderstanding of D's "compile-time" capabilities as it relates to CTFE, or Compile-Time Function Evaluation. Let's talk about that next. | ||

| + | |||

| + | ==CTFE== | ||

| + | |||

| + | CTFE stands for Compile-Time Function Evaluation. This is an extremely powerful feature that D offers, and is similar to C++'s <code>constexpr</code> feature (though in the author's opinion far more powerful). | ||

| + | |||

| + | The first and most important thing to understand about CTFE is that it happens ''after the AST manipulation phase has been completed''. More precisely, it happens when the compiler has "finalized" the AST of that part of the program, and is performing semantic analysis on it. Identifiers are assigned meanings as modules, functions, function arguments, variables, and so on, control-flow constructs like <code>if</code> and <code>foreach</code> are given their meanings, and other semantic analyses such as VRP (Value Range Propagation) are performed. | ||

| + | |||

| + | ===Constant-folding=== | ||

| + | |||

| + | Part of this semantic analysis is ''constant-folding''. For example, if we wrote something like this: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int i = 3*(5 + 7); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | it would be a waste of computational resources to perform the indicated calculation (add 5 to 7, multiply the result by 3) at runtime, because all the arguments of the calculation are constants known to the compiler, and the result will never change at runtime. Of course, this particular example is trivial, but imagine if this line of code were inside a busy inner loop in a performance-critical part of the program. If we ''folded'' this constant expression into its resulting value, the program would run faster, since it wouldn't have to repeat this same calculation over and over, and indeed, it wouldn't even need to perform the calculation at all, since the answer is already known by the compiler. The answer could be directly assigned to <code>i</code>: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int i = 36; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This process is called ''constant-folding'', and is implemented by basically all modern compilers of any language today. So it is nothing new. The D compiler, however, takes this game to a whole new level. | ||

| + | |||

| + | ===Constant-folding, glorified=== | ||

| + | |||

| + | Consider, for example, this code: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int f(int x) | ||

| + | { | ||

| + | return x + 1; | ||

| + | } | ||

| + | |||

| + | int i = 3*(5 + f(6)); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Again, if we perform the calculation manually, we see that the value of the expression is 36. However, this time, the constants involved in the expression are masked by the function call to <code>f</code>. But since the definition of <code>f</code> is visible to the compiler, and all it does is to add a constant value 1 to its argument, the compiler should be able to deduce that, again, the expression is constant and does not need to be performed at runtime. | ||

| + | |||

| + | But what if <code>f</code> was more complicated than merely adding a constant to its argument, for example: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int f(int x) | ||

| + | { | ||

| + | int a = 0; | ||

| + | for (int i=1; i <= x/2; i++) | ||

| + | { | ||

| + | a += i; | ||

| + | } | ||

| + | return a + 1; | ||

| + | } | ||

| + | |||

| + | int i = 3*(5 + f(6)); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Again, the value of <code>f(6)</code> is constant, but in order to know its value, the compiler has to effectively ''run this function during compilation''. And in order to do that, the compiler essentially has to compile the body of <code>f</code> into a state where it can be run inside a D interpreter embedded inside the compiler. | ||

| + | |||

| + | This, in a nutshell, is how CTFE came about in the history of D. There is a limit as to how much this D virtual machine can do, but there's an ongoing trend of expanding its capabilities as far as possible. As of this writing, the D compiler is able to execute significant chunks of the Phobos standard library during compilation, thus making many library features accessible during compilation without needing to implement them the second time inside the compiler. Furthermore, a focused effort is being spearheaded by Stefan Koch to replace the current CTFE engine with an even more powerful one based on a bytecode interpreter, that promises superior CTFE performance and better memory management, and eventually, more features. | ||

| + | |||

| + | ===Forcing CTFE evaluation=== | ||

| + | |||

| + | Of course, the compiler usually does not always go this far in attempting to constant-fold an expression. Past a certain level of complexity, it makes more sense for the compiler to simply leave it up to the generated runtime code to compute the value of the expression. After all, it may be that the entire purpose of the program is to compute the constant answer to a complex mathematical problem. It wouldn't make sense to perform such a computation in the slower CTFE engine instead of letting the generated executable run the computation at native execution speed. | ||

| + | |||

| + | Being ''able'' to perform such a computation at compile-time when needed, however, can be very useful. For example, you could precompute values for a lookup table that gets stored into the executable, so that there is no runtime cost associated with initializing the table when the program starts up. As such, it is sometimes desirable to ''force'' the compiler to evaluate an expression at compile-time rather than relegating it to runtime. The usual idiom is to assign the result of the computation to a construct that requires the value to be known at compile-time, such as an <code>enum</code> or a template parameter. For example: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int complicatedComputation(int x, int y) | ||

| + | { | ||

| + | return ...; /* insert complicated computation here */ | ||

| + | } | ||

| + | |||

| + | void main() | ||

| + | { | ||

| + | // The compiler may choose to evaluate this at compile-time or not, | ||

| + | // depending on how complex the computation is. | ||

| + | int i = complicatedComputation(123, 456); | ||

| + | |||

| + | // Since the value of an enum must be known at compile-time, the | ||

| + | // compiler has no choice but to evaluate it in CTFE. This is the | ||

| + | // standard idiom for forcing CTFE evaluation of an expression. | ||

| + | enum j = complicatedComputation(123, 456); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | In discussions among D users, when CTFE is mentioned it is usually in this context, where CTFE evaluation is forced because the value of an expression must be known at compile-time. | ||

| + | |||

| + | ==A different "compile-time"== | ||

| + | |||

| + | Coming back to the topic at hand, though, notice that when we speak of CTFE, we speak of "virtual machines" and "bytecode interpreters". This implies that by this point, the code has gone far enough through the compilation process that it is essentially ready to be turned into runtime executable code. | ||

| + | |||

| + | In particular, this means that it has long passed the AST manipulation stage. Which in turn implies that code that can be evaluated by CTFE ''can no longer make use of AST manipulation constructs'' like <code>static if</code>. In order for CTFE to work, semantic notions such as variables and memory must have been assigned to various constructs in the code, otherwise there is nothing to execute or interpret. But in the AST manipulation phase, such semantics have not yet been assigned -- we're still manipulating the structure of the program. | ||

| + | |||

| + | Thus, even though CTFE happens at "compile-time" just as AST manipulation happens at "compile-time", this is actually a different "compile-time". It is much closer to "runtime" than AST manipulation, which represents a much earlier stage in the compilation process. This is why the terminology "compile-time" is confusing: it gives the false impression that all of these features, AST manipulation and CTFE alike, are lumped together into a single, amorphous blob of time labelled "compile-time", and that the compiler can somehow make it all magically just work, by fiat. | ||

| + | |||

| + | The point to take away from all this, is that AST manipulation constructs are applied first, and then the code may be used in CTFE later: | ||

| + | |||

| + | :AST manipulation → CTFE | ||

| + | |||

| + | The unidirectional arrow indicates that a piece of code can only move from AST manipulation to CTFE, but never the other way round. | ||

| + | |||

| + | Of course, in practice, this simple picture is only part of the story. To understand how it all works, it's best to look at actual code examples. So let's now take a look at a few common pitfalls that D learners often run into, and see how this principle applies in practice. | ||

| + | |||

| + | ==Case Study: Reading CTFE variables at AST manipulation time== | ||

| + | |||

| + | A rather common complaint that's brought up in the D forums from time to time pertains to code along these lines: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(bool b) | ||

| + | { | ||

| + | static if (b) // <--- compile error at this line | ||

| + | return 1; | ||

| + | else | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | // N.B.: the enum forces the compiler to evaluate the function in CTFE | ||

| + | enum myInt = ctfeFunc(true); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | If you try to compile the above code, the compiler will complain that the <code>static if</code> cannot read the value of <code>b</code> at "compile-time". Which almost certainly elicits the reaction, "What??! What do you mean you can't read <code>b</code> at compile-time?! Aren't you running this code in CTFE, which is by definition compile-time, with a value of <code>b</code> that's obviously known at compile-time?" | ||

| + | |||

| + | On the surface, this would appear to be a glaring bug in the compiler, or a glaring shortcoming in D's "compile-time" capabilities, and/or an astonishing lack of competence on the part of the D compiler authors in failing to notice a problem in such an obvious and simple use case for CTFE. | ||

| + | |||

| + | If we understand what's really going on, however, we would see why the compiler rejected this code. Remember that during the process of compilation, the D compiler first creates an AST of the code, evaluating any <code>static if</code>s that may change the shape of the resulting AST. | ||

| + | |||

| + | So when the compiler first encounters the declaration of <code>ctfeFunc</code>, it scans its body and sees the <code>static if (b)</code> while building the AST for this function. If the value of <code>b</code> is <code>true</code>, then it would emit the AST tree that essentially corresponds with: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(bool b) | ||

| + | { | ||

| + | return 1; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | (Recall that in the AST manipulation stage, the false branch of a <code>static if</code> is discarded from the resulting AST, and it is as if it wasn't even there. So the <code>return 0</code> doesn't even make it past this stage.) | ||

| + | |||

| + | If the value of <code>b</code> is <code>false</code>, then it would emit the AST tree that corresponds with: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(bool b) | ||

| + | { | ||

| + | return 0; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | There is a problem here, however. The value of <code>b</code> is unknown at this point. All the compiler knows about <code>b</code> at this point is that it's an identifier representing a parameter of the function. Semantics such as what values it might hold haven't been attached to it yet. In fact, the compiler hasn't even gotten to the <code>enum</code> line that calls <code>ctfeFunc</code> with an argument of <code>true</code> yet! | ||

| + | |||

| + | And even if the compiler ''did'' get to the <code>enum</code> line, it wouldn't have been able to assign a value to <code>b</code>, because the function's AST is still not fully processed yet. You can't assign values to identifiers in an AST that hasn't been fully constructed yet, because the meaning of the identifiers may change once the AST is altered. It is simply too early at this point to meaningfully assign any value to <code>b</code>. The notion of assigning a value to a parameter is a semantic concept that can only be applied ''after'' the AST manipulation phase. But in order to fully process the AST, the compiler needs to know the value of <code>b</code>. Yet the value of <code>b</code> cannot be known until after the AST has been processed. This is an insoluble impasse, so the compiler gives up and emits a compile error. | ||

| + | |||

| + | ===Solution 1: Make it available during AST manipulation=== | ||

| + | |||

| + | One possible solution to this impasse is to make the value of <code>b</code> available during the AST manipulation phase. The simplest way to do this is to turn <code>ctfeFunc</code> into a template function with <code>b</code> as a template parameter, with the corresponding change in the <code>enum</code> line to pass <code>true</code> as a template argument rather than a runtime argument: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(bool b)() // N.B.: the first set of parentheses enclose template parameters | ||

| + | { | ||

| + | static if (b) // Now this compiles without error | ||

| + | return 1; | ||

| + | else | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | enum myInt = ctfeFunc!true; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Since <code>b</code> is now a template argument, its value is known during AST manipulation, and so the <code>static if</code> can be compiled without any problems. | ||

| + | |||

| + | ===Solution 2: Do everything during AST manipulation instead=== | ||

| + | |||

| + | The foregoing solution works, but if we consider it more carefully, we will see that we can take it further. Look at it again from the standpoint of the AST manipulation. After the AST manipulation phase, the function has essentially become: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc() // N.B.: template parameters no longer exist after AST manipulation phase | ||

| + | { | ||

| + | return 1; // N.B.: else branch of static if has been discarded | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This means that CTFE wasn't actually necessary to evaluate this function in the first place! We could have just as easily declared <b>ctfeFunc</b> as a template, completely evaluated in the AST manipulation phase. (And we might as well also rename it to something other than <code>ctfeFunc</code>, since it would be no longer evaluated in CTFE, and no longer even a function): | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | template Value(bool b) | ||

| + | { | ||

| + | static if (b) | ||

| + | enum Value = 1; | ||

| + | else | ||

| + | enum Value = 0; | ||

| + | } | ||

| + | |||

| + | enum myVal = Value!true; | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Now <code>myVal</code> can be completely evaluated at the AST manipulation phase, and CTFE doesn't even need to be involved. | ||

| + | |||

| + | ===Solution 3: Move everything to CTFE=== | ||

| + | |||

| + | There is another approach, however. Although the example we have given is rather trivial, in practice CTFE functions tend to be a lot more involved than a mere if-condition over a boolean parameter. Some functions may not be amenable to be rewritten in template form. So what do we do in that case? | ||

| + | |||

| + | The answer may surprise you: get rid of the <code>static if</code>, and replace it with a plain old "runtime" <code>if</code>, like this: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(bool b) | ||

| + | { | ||

| + | if (b) // <--- N.B.: regular if, not static if | ||

| + | return 1; | ||

| + | else | ||

| + | return 0; | ||

| + | } | ||

| + | |||

| + | // N.B.: the enum forces the compiler to evaluate the function in CTFE | ||

| + | enum myInt = ctfeFunc(true); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | And, miracle of miracles, this code compiles without any error, and with the correct value for <code>myInt</code>! But wait a minute. What's going on here? How can changing <code>static if</code> to <code>if</code>, ostensibly a "runtime" construct, possibly work for a value that is needed at "compile-time"? Is the compiler cheating and secretly turning <code>myInt</code> into a runtime computation behind our backs? | ||

| + | |||

| + | Actually, inspecting the executable code with a disassembler shows that no such cheating is happening. So how does this work? | ||

| + | |||

| + | ==Interleaved AST manipulation and CTFE== | ||

| + | |||

| + | Let's take a close look. This time, during the AST manipulation phase, nothing much happens besides the usual construction of the AST corresponding with <code>ctfeFunc</code>. There are no <code>static if</code>s or other template-related constructs anymore, so the resulting AST is as straightforward as it can get. Then the compiler sees the <code>enum</code> declaration and realizes that it needs to evaluate <code>ctfeFunc</code> at "compile-time". | ||

| + | |||

| + | Here is where something interesting happens. Based on the above discussion, you may think, the compiler is still in the "AST manipulation" stage (because it hasn't fully constructed the AST for <code>myInt</code> yet), so wouldn't this fail, since <code>ctfeFunc</code> hasn't gotten to the semantic analysis phase yet? Note, however, that while it is certainly true that the AST for <code>myInt</code> hasn't been fully resolved yet (and therefore we can't read the value of <code>myInt</code> from CTFE at this point in time), the AST for <code>ctfeFunc</code>, by this point, ''is'' already ready to proceed to the next stage of compilation. Or, more precisely, ''the subtree of the program's AST corresponding with <code>ctfeFunc</code> is complete,'' and can be handed over to semantic analysis now. | ||

| + | |||

| + | So the D compiler, being pretty smart about these things, realizes that it can go ahead with the semantic analysis of <code>ctfeFunc</code> ''independently of the fact that other parts of the program's AST, namely the declaration of <code>myInt</code>, aren't completed yet.'' This works because <code>ctfeFunc</code>'s subtree of the AST can be semantically analyzed as a unit on its own, without depending on <code>myInt</code>'s subtree of the AST at all! (It would fail if <code>ctfeFunc</code> somehow depended on the value of <code>myInt</code>, though.) | ||

| + | |||

| + | Thus, the compiler applies semantic analysis to the AST of <code>ctfeFunc</code> and brings it to the point where it can be interpreted by the CTFE engine. The CTFE engine is then invoked to run <code>ctfeFunc</code> with the value <code>true</code> as its argument -- essentially simulating what it would have done at runtime. The return value of 1 is then handed back to the AST manipulation code that's still waiting to finish processing the AST for <code>myInt</code>, upon which the latter AST also becomes fully constructed. | ||

| + | |||

| + | Perhaps this "smart" invocation of CTFE interleaved with AST manipulation is part of what imparts some validity to the term "compile-time" as referring to the entire process as a whole. Hopefully, though, by now you have also acquired a better understanding of why there are really (at least) two different "compile-times": an early phase where AST manipulation happens, and a later phase with CTFE which is very much like runtime except that it just so happens to be done inside the compiler. These phases may be applied to different parts of the program's AST at different times, though with respect to each part of the AST they are ''always'' in the order of AST manipulation first, and then CTFE. AST manipulation always happens on any given subtree of the AST ''before'' CTFE can be applied to that subtree, and CTFE can run on a given subtree of the AST only if the entire subtree has already passed the AST manipulation phase. | ||

| + | |||

| + | This interleaving of AST manipulation and CTFE is what makes D's "compile-time" features so powerful: you can perform arbitrarily complex computations inside CTFE (subject to certain limitations of the CTFE engine, of course), and then use the result to manipulate the AST of another part of the program. You can even have that other part of the program also pass through CTFE, and use the result of ''that'' to affect the AST of a third part of the program, and so on, all as part of the compilation process. This is one of the keystones of metaprogramming in D. | ||

| + | |||

| + | ==Case Study: pragma(msg) and CTFE== | ||

| + | |||

| + | Another common complaint by D learners arises from trying to debug CTFE functions, and pertains to the "strange" way in which <code>pragma(msg)</code> behaves in CTFE. | ||

| + | |||

| + | First of all, if you're not familiar with it, <code>pragma(msg)</code> is a handy compiler feature that lets you debug certain compile-time processes. When the <code>pragma(msg)</code> directive is processed by the compiler, the compiler outputs whatever message is specified as arguments to the <code>pragma</code>. For example: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | template MyTemplate(T) | ||

| + | { | ||

| + | pragma(msg, "instantiating MyTemplate with T=" ~ T.stringof); | ||

| + | // ... insert actual code here | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This causes the compiler to print "instantiating MyTemplate with T=int" when <code>MyTemplate!int</code> is instantiated, and to print "instantiating MyTemplate with T=float" when <code>MyTemplate!float</code> is instantiated. This can be a useful debugging tool to trace exactly what instantiations are being used in the code. | ||

| + | |||

| + | So far so good. | ||

| + | |||

| + | Complaints, however, tend to arise when people attempt to do things like this: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int ctfeFunc(int x) | ||

| + | { | ||

| + | if (x < 100) | ||

| + | return x; | ||

| + | else | ||

| + | { | ||

| + | pragma(msg, "bad value passed in"); | ||

| + | return -1; | ||

| + | } | ||

| + | } | ||

| + | enum y = ctfeFunc(50); // N.B.: enum forces CTFE on ctfeFunc | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Even though the argument 50 is well within the bounds of what <code>ctfeFunc</code> can handle, the compiler persistently prints "bad value passed in". And it does the same if the argument is changed to something the function ostensibly rejects, like 101. What gives? | ||

| + | |||

| + | "Compiler bug!" some would scream. | ||

| + | |||

| + | By now, however, you should be able to guess at the answer: <code>pragma(msg)</code> is a construct that pertains to the AST manipulation phase. The compiler prints the message while it is building the AST of <code>ctfeFunc</code>, well before it even knows that it needs to invoke CTFE on it. The <code>pragma(msg)</code> is then discarded from the AST. As we have mentioned, during the AST manipulation phase the compiler has not yet attached any meaning to <code>if</code> or any value to the identifier <code>x</code>; these are seen merely as syntax nodes to be attached to the AST being built. So the <code>pragma(msg)</code> is processed without any regard to the semantics of the surrounding code -- said semantics haven't been attached to the AST yet! Since there are no AST manipulation constructs that would prune away the subtree containing the <code>pragma(msg)</code>, the specified message is ''always'' printed regardless of what the value of <code>x</code> will be in CTFE. By the time this function gets to CTFE, the <code>pragma(msg)</code> has already been discarded from the AST, and the CTFE engine doesn't even see it. | ||

| + | |||

| + | ==Case Study: static if and __ctfe== | ||

| + | |||

| + | Another common source of misunderstandings is the built-in magic variable <code>__ctfe</code>. This variable evaluates to <code>true</code> when inside the CTFE engine, but always evaluates to <code>false</code> at runtime. It is useful for working around CTFE engine limitations when your code contains constructs that work fine at runtime, but aren't currently supported in CTFE. It can also be used for optimizing your code for the CTFE engine by taking advantage of its known performance characteristics. | ||

| + | |||

| + | As a simple example, as of this writing <code>std.array.appender</code> is generally recommended for use at runtime when you're appending a large number of items to an array. However, due to the way the current CTFE engine works, it is better to simply use the built-in array append operator <code>~</code> when inside the CTFE engine. Doing so would reduce the memory footprint of CTFE, and probably improve compilation speed as well. So you would test the <code>__ctfe</code> variable in your code and choose the respective implementation depending on whether it is running in CTFE or at runtime. | ||

| + | |||

| + | Since <code>__ctfe</code> is, ostensibly, a "compile-time" variable, useful only for targeting the CTFE engine, a newcomer to D may be tempted to write the code like this: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int[] buildArray() | ||

| + | { | ||

| + | static if (__ctfe) // <-- this is line 3 | ||

| + | { | ||

| + | // We're in CTFE: just use ~= for appending | ||

| + | int[] result; | ||

| + | foreach (i; 0 .. 1_000_000) | ||

| + | result ~= i; | ||

| + | return result; | ||

| + | } | ||

| + | else | ||

| + | { | ||

| + | // This is runtime, use appender() for faster performance | ||

| + | import std.array : appender; | ||

| + | auto app = appender!(int[]); | ||

| + | foreach (i; 0 .. 1_000_000) | ||

| + | app.put(i); | ||

| + | return app.data; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This code, unfortunately, gets rejected by the compiler: | ||

| + | |||

| + | <syntaxhighlight lang=bash> | ||

| + | test.d(3): Error: variable __ctfe cannot be read at compile time | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | which, almost certainly, elicits the response, "What?! What do you mean <code>__ctfe</code> cannot be read at compile time?! Isn't it specifically designed to work in CTFE, which is a compile-time feature??" | ||

| + | |||

| + | Knowing what we now know, however, we can understand why this doesn't work: <code>static if</code> is a construct that pertains to the AST manipulation phase of the code, whereas <code>__ctfe</code> is clearly something specific to the later CTFE phase. At the AST manipulation phase of compilation, the compiler doesn't even know whether <code>buildArray</code> is going to be evaluated by CTFE or not. In fact, it hasn't even assigned a semantic meaning to the identifier <code>__ctfe</code> yet, because semantic analysis is not performed until the construction of the AST has been completed. Identifiers are not assigned concrete meanings until semantic analysis is done. So even though both <code>static if</code> and <code>__ctfe</code> are "compile-time" features, the former relates to an earlier phase of compilation, and the latter to a later phase. Again, we see that conflating the two under the blanket term "compile-time" leads to confusion. | ||

| + | |||

| + | ===Solution: Just use if=== | ||

| + | |||

| + | The solution is simple: just replace <code>static if</code> with <code>if</code>: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | int[] buildArray() | ||

| + | { | ||

| + | if (__ctfe) // <--- N.B. no longer static if | ||

| + | { | ||

| + | // We're in CTFE: just use ~= for appending | ||

| + | int[] result; | ||

| + | foreach (i; 0 .. 1_000_000) | ||

| + | result ~= i; | ||

| + | return result; | ||

| + | } | ||

| + | else | ||

| + | { | ||

| + | // This is runtime, use appender() for faster performance | ||

| + | import std.array : appender; | ||

| + | auto app = appender!(int[]); | ||

| + | foreach (i; 0 .. 1_000_000) | ||

| + | app.put(i); | ||

| + | return app.data; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Now <code>buildArray</code> works correctly, because the AST of this function can be fully built, analysed, and then, if necessary, passed to the CTFE engine for execution. When the CTFE engine interprets the code, it can then assign semantic meaning to <code>__ctfe</code> and take the true branch of the if-statement. At runtime, <code>__ctfe</code> is always <code>false</code> and the false branch is always taken. | ||

| + | |||

| + | ===But what of runtime performance?=== | ||

| + | |||

| + | One question that may still be lingering, though, is whether this "non-static" <code>if</code>, ostensibly a runtime construct, would generate redundant code in the executable. Since <code>__ctfe</code> will always be false at runtime, it would be a waste of CPU resources to always perform an unconditional branch over the CTFE-specific version of the code. On modern CPUs, branches can cause the instruction pipeline to stall, leading to poor performance. The CTFE-specific part of the code would also be dead weight, taking up space in the executable and using up memory at runtime, but serving no purpose since it will never be run. | ||

| + | |||

| + | Compiling the code and examining it with a disassembler, however, shows that such fears are unfounded: the branch is elided by the compiler's code generator because the value of <code>__ctfe</code> is statically <code>false</code> outside of the CTFE engine. So the optimizer sees that the true branch of the if-statement is dead code, and simply omits it from the generated object code. There is no performance penalty and no additional dead code in the executable. | ||

| + | |||

| + | ==Case Study: foreach over a type list== | ||

| + | |||

| + | Let's now turn to something that might trip up even experienced D coders. Consider the following function: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | void func(Args...)(Args args) | ||

| + | { | ||

| + | foreach (a; args) | ||

| + | { | ||

| + | static if (is(typeof(a) == int)) | ||

| + | { | ||

| + | pragma(msg, "is an int"); | ||

| + | continue; | ||

| + | } | ||

| + | pragma(msg, "not an int"); | ||

| + | } | ||

| + | } | ||

| + | void main() | ||

| + | { | ||

| + | func(1); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Trick question: what is the compiler's output when this program is compiled? | ||

| + | |||

| + | If your answer is "is an int", you're wrong. | ||

| + | |||

| + | Here's the output: | ||

| + | |||

| + | <syntaxhighlight lang=bash> | ||

| + | is an int | ||

| + | not an int | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Now wait a minute! Surely this is a bug? There is only one argument passed to <code>func</code>; how could it possibly have ''two'' lines of output? | ||

| + | |||

| + | Let's go through the paces of what we've learned so far, and see if we can figure this out. | ||

| + | |||

| + | First, <code>func</code> is a template function, so it is not semantically analyzed until it is instantiated by a function call that specifies its argument types. This happens when the compiler is processing the <code>main</code> function, and sees the call <code>func(1)</code>. So, by IFTI (Implicit Function Template Instantiation -- the process of inferring the template arguments to a template function by looking at the types of the runtime arguments that are passed to it), the compiler assigns <code>Args</code> to be the single-member sequence <code>(int)</code>. | ||

| + | |||

| + | That is, the function call is translated as: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | func!(int)(1); | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This causes the instantiation of <code>func</code>, which causes the compiler to build an AST for this particular instantiation based on the template body -- i.e., it enters the AST manipulation phase for the (copy of the) function body. | ||

| + | |||

| + | ===Automatic unrolling=== | ||

| + | |||

| + | There is a <code>foreach</code> over <code>args</code>. There's a tricky bit involved here, in that this <code>foreach</code> isn't just any <code>foreach</code> loop; it is a loop over variadic arguments. Such loops are treated specially in D: they are automatically unrolled. In AST terms, this means that the compiler will generate n copies of the AST for the loop body, once per iteration. Note also that this is done at the AST manipulation phase; ''there is no CTFE involved here''. This kind of <code>foreach</code> loop is different from the usual "runtime" <code>foreach</code>. | ||

| + | |||

| + | Then the compiler processes the loop body, and sees <code>static if</code>. Since the condition is true (the current element being looped over, which is also the only argument to the function, is an <code>int</code> with a value of 1), the compiler expands the <code>true</code> branch of the <code>static if</code>. | ||

| + | |||

| + | Then it sees the <code>pragma(msg)</code>, and emits the message "is an int". | ||

| + | |||

| + | Following that, it sees <code>continue</code>. And here's the important point: since we are in AST manipulation phase, <code>continue</code> is just another syntax node to be attached to the AST being built. The <code>continue</code> is not interpreted by the AST manipulation phase! | ||

| + | |||

| + | And so, moving on to the next item in the loop body, the AST manipulation code sees another <code>pragma(msg)</code> and outputs "not an int". | ||

| + | |||

| + | It is important to note here, and we repeat for emphasis, that: | ||

| + | # CTFE is ''not involved'' here; the loop unrolling happens in the AST manipulation phase, not in CTFE; | ||

| + | # the <code>continue</code> is not interpreted by the AST manipulation phase, but is left in the AST to be translated into code later on. | ||

| + | |||

| + | ===foreach over a type list does NOT interpret break and continue=== | ||

| + | |||

| + | This last point is worth elaborating on, because even advanced D users may be misled to think that foreach over a type list interprets <code>break</code> and <code>continue</code> specially, i.e., during the unrolling of the loop. The next code snippet illustrates this point: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | import std.stdio; | ||

| + | void func(Args...)(Args args) | ||

| + | { | ||

| + | foreach (arg; args) // N.B.: this is foreach over a type list | ||

| + | { | ||

| + | static if (is(typeof(arg) == int)) | ||

| + | continue; | ||

| + | |||

| + | writeln(arg); | ||

| + | |||

| + | static if (is(typeof(arg) == string)) | ||

| + | break; | ||

| + | |||

| + | writeln("Got to end of loop body with ", arg); | ||

| + | } | ||

| + | } | ||

| + | void main() | ||

| + | { | ||

| + | func(1, "abc", 3.14159); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | What do you think is the output of this program? (Not a trick question.) | ||

| + | |||

| + | Here's the output: | ||

| + | |||

| + | <syntaxhighlight lang=bash> | ||

| + | abc | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This seems to confirm our initial hypothesis that <code>continue</code> and <code>break</code> are interpreted by the foreach, such that the first argument, which is an <code>int</code>, causes the rest of the loop body to be skipped until the next iteration, and the second argument, which is a <code>string</code>, breaks out of the loop itself and thus causes the loop unrolling to be interrupted. | ||

| + | |||

| + | However, this is not true, as can be proven by replacing the last <code>writeln</code> with a <code>static assert</code>: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | import std.stdio; | ||

| + | void func(Args...)(Args args) | ||

| + | { | ||

| + | foreach (arg; args) // N.B.: this is foreach over a type list | ||

| + | { | ||

| + | static if (is(typeof(arg) == int)) | ||

| + | continue; | ||

| + | |||

| + | writeln(arg); | ||

| + | |||

| + | static if (is(typeof(arg) == string)) | ||

| + | break; | ||

| + | |||

| + | // This should be true, right? | ||

| + | // Since the string case has broken out of the loop? | ||

| + | static assert(!is(typeof(arg) == string)); // line 16 | ||

| + | } | ||

| + | } | ||

| + | void main() | ||

| + | { | ||

| + | func(1, "abc", 3.14159); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | Here is what the compiler has to say: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | test.d(16): Error: static assert (!true) is false | ||

| + | test.d(21): instantiated from here: func!(int, string, double) | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | What's going on here? | ||

| + | |||

| + | It seems counterintuitive, but it's actually very simple, and should be readily understandable now that we have a clearer idea of the role of AST manipulation. Simply put, the foreach does ''not'' interpret <code>continue</code> and <code>break</code> at all during the AST manipulation phase. They are treated merely as nodes in the AST being constructed, and thus the compiler continues process the rest of the loop body. Thus, the <code>static assert</code> is evaluated ''in all three iterations of the loop'', including the second iteration where it fails because <code>typeof(arg) == string</code>. | ||

| + | |||

| + | ===What foreach over a type list actually does=== | ||

| + | |||

| + | But if this is the case, then why does the original loop appear to obey <code>continue</code> and <code>break</code>? To answer that, let's take a look at the actual AST as printed by the D compiler <code>dmd</code> (with additional comments added by yours truly): | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | @safe void func(int _param_0, string _param_1, double _param_2) | ||

| + | { | ||

| + | import std.stdio; | ||

| + | /*unrolled*/ { | ||

| + | { | ||

| + | int arg = _param_0; | ||

| + | continue; | ||

| + | writeln(arg); // N.B.: NOT skipped! | ||

| + | } | ||

| + | { | ||

| + | string arg = _param_1; | ||

| + | writeln(arg); | ||

| + | break; | ||

| + | } | ||

| + | { | ||

| + | // N.B.: this iteration is NOT skipped! | ||

| + | double arg = _param_2; | ||

| + | writeln(arg); // N.B.: NOT skipped! | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | During code generation (which is another phase that comes after AST manipulation), however, the compiler's code generator notices that the first loop iteration begins with an unconditional branch to the next iteration. As such, the rest of the first iteration is dead code, and can be elided altogether. Similarly, in the second loop iteration, the code generator notices that there is an unconditional branch to the end of the loop, so the rest of that iteration is also dead code and can be elided. Lastly, the third loop iteration is never reached -- it is dead code, and gets elided as well. | ||

| + | |||

| + | After these elisions, what's left is: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | void func!(int, string, double)(int __arg0, string __arg1, double __arg2) | ||

| + | { | ||

| + | writeln(__arg1); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | which is what produced the observed output. | ||

| + | |||

| + | In other words, it wasn't the foreach over the type list that pruned the code following the <code>break</code> and the <code>continue</code>; it's actually the compiler's optimizer, which is part of the code generator, getting rid of dead code so that the final executable doesn't waste space on what will never be executed. | ||

| + | |||

| + | ===Possible solutions=== | ||

| + | |||

| + | The simplest solution to the conundrum posed by the original code in this case study is to use an <code>else</code> clause with the <code>static if</code>: | ||

| + | |||

| + | <syntaxhighlight lang=D> | ||

| + | void func(Args...)(Args args) | ||

| + | { | ||

| + | foreach (a; args) | ||

| + | { | ||

| + | static if (is(typeof(a) == int)) | ||

| + | { | ||

| + | pragma(msg, "is an int"); | ||

| + | continue; | ||

| + | } | ||

| + | else // <---- N.B.: else clause | ||

| + | pragma(msg, "not an int"); | ||

| + | } | ||

| + | } | ||

| + | void main() | ||

| + | { | ||

| + | func(1); | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | This ensures that the second <code>pragma(msg)</code> is correctly elided from the generated AST when the condition of the <code>static if</code> is false. | ||

| + | |||

| + | ==Summary== | ||

| + | |||

| + | In summary, we learned that there are (at least) two distinct stages of compilation that a piece of D code passes through: | ||

| + | |||

| + | # The AST manipulation phase, where templates are expanded and <code>static if</code> is processed. In this phase, we are manipulating the structure of the code itself in the form of its AST (Abstract Syntax Tree). Semantic concepts such as variables, the meaning of control-flow constructs like <code>break</code> or <code>continue</code> do not apply in this stage. | ||

| + | # The semantic analysis phase, where meaning is attached to the AST. Notions such as variables, arguments, and control-flow are applied here. AST manipulation constructs can no longer be applied at this point. CTFE (Compile-Time Function Evaluation) can only be used for code that has already passed the AST manipulation phase and the semantic analysis phase. By the time the CTFE engine sees the code, anything involving templates, <code>static if</code>, or any of the other AST manipulation features has already been processed, and the CTFE engine does not see the original AST manipulation constructs anymore. | ||

| + | |||

| + | Each piece of code passes through the AST manipulation phase and the semantic analysis phase, ''in that order'', and never the other way around. Consequently, CTFE can only run on a piece of code ''after'' it has finished its AST manipulation phase. | ||

| + | |||

| + | Nevertheless, it is possible for ''another'' part of the program to still be in the AST manipulation phase, depending on a value computed by a piece of code that has already passed the AST manipulation phase and is ready to be interpreted by the CTFE engine. This interleaving of AST manipulation and CTFE is what makes D's "compile-time" features so powerful. But it is subject to the condition that the code running in CTFE must itself have already passed its AST manipulation phase; it cannot depend on anything that hasn't reached the semantic analysis phase yet. | ||

| + | |||

| + | Mixing up AST manipulation constructs with CTFE-specific semantic notions is what causes most of the confusion and frustrations with D's "compile-time" features. | ||

Latest revision as of 16:40, 13 May 2022

- By H. S. Teoh, March 2017

One of D's oft-touted features is its awesome "compile-time" capabilities. However, said capabilities are also often the source of much confusion and misunderstanding, especially on the part of newcomers to D, often taking the form of questions posted to the discussion forum by frustrated users such as the classic: "Why can't the compiler read this value at compile-time, since it's clearly known at compile-time?!"

This article hopes to clear up most of these misunderstandings by explaining just what D's "compile-time" capabilities are, how it works, and how to solve some commonly-encountered problems.

Contents

- 1 There's compile-time, and then there's compile-time

- 2 Template expansion / AST manipulation

- 3 CTFE

- 4 A different "compile-time"

- 5 Case Study: Reading CTFE variables at AST manipulation time

- 6 Interleaved AST manipulation and CTFE

- 7 Case Study: pragma(msg) and CTFE

- 8 Case Study: static if and __ctfe

- 9 Case Study: foreach over a type list

- 10 Summary

There's compile-time, and then there's compile-time

Part of the confusion is no thanks to the overloaded term "compile-time". It sounds straightforward enough -- "compile-time" is just the time when the compiler performs its black magic of transforming human-written D code into machine-readable executables. So, the reasoning goes, if feature X is a "compile-time" feature, and feature Y is another "compile-time" feature, then surely X and Y ought to be usable in any combination, right? Since, after all, it all happens at "compile-time", and the compiler ought to be able to sort it all out magically.

The reality, of course, is a bit more involved than this. There are, roughly speaking, at least two distinct categories of D features that are commonly labelled "compile-time":

- Template expansion, or abstract syntax tree (AST) manipulation; and

- Compile-time function evaluation (CTFE).

While these two take place at "compile-time", they represent distinct phases in the process of compilation, and understanding this distinction is the key to understanding how D's "compile-time" features work.

In particular, the AST manipulation features apply to an early stage in the process of compilation, whereas CTFE applies to a much later stage. To understand this, it is helpful to know roughly the stages of compilation that a piece of code goes through:

- Lexical analysis and parsing, where the compiler scans the human-written text of the program and turns it into a syntax tree representing the structure of the code;

- AST manipulation, where templates are expanded and other AST manipulation features are applied;

- Semantic analysis, where meaning is assigned to various AST features, such as associating an identifier with a data type, another identifier with a function, and another identifier with a variable.

- Code generation, where the semantically-analyzed code is used to emit the machine code that goes into the executable.

CTFE sits somewhere between semantic analysis and code generation, and basically involves running D code inside an interpreter (called the CTFE engine) embedded inside the compiler.

Since CTFE can only take place after semantic analysis has been performed, it no longer has access to AST manipulation features. Similarly, at the AST manipulation stage, no semantics have been attached to various structures in the code yet, and so "compile-time" features that manipulate the AST can't access things inside the CTFE engine.

Thus, as far as D's "compile-time" features are concerned, there are really two different "compile-times"; an early phase involving manipulating the program's AST, and a late phase involving CTFE. Confusing these two is the source of most of the problems encountered when using D's "compile-time" features.

These two phases interact in more complex ways than it might appear at first, though. In order to understand how this works, we need to look at each of these two categories of "compile-time" features in more detail.

Template expansion / AST manipulation

One of the first things the compiler does when it compiles your code, is to transform the text of the code into what is commonly known as the Abstract Syntax Tree (AST).

For example, this program:

import std.stdio;

void main(string[] args)

{

writeln("Hello, world!");

}

is parsed into something resembling this:

(Note: this is not the actual AST created by the compiler; it is only an example to illustrate the point. The AST created by the compiler may differ slightly in structure and/or details.)

The AST represents the structure of the program as seen by the compiler, and contains everything the compiler needs to eventually transform the program into executable machine code.

One key point to note here is that in this AST, there are no such things as variables, memory, or input and output. At this stage of compilation, the compiler has only gone as far as building a model of the program structure. In this structure, we have identifiers like args and writeln, but the compiler has not yet attached semantic meanings to them yet. That will be done in a later stage of compilation.

Part of D's powerful "compile-time" capabilities stem from the ability to manipulate this AST to some extent as the program is being compiled. Among the features that D offers are templates and static if.

Templates

- If you are already familiar with the basics of templates, you may want to skip to the following section.

One of D's powerful features is templates, which are similar to C++ templates. Templates can be thought of as code stencils, or AST patterns that can be used to generate AST subtrees. For example, consider the following template struct:

struct Box(T)

{

T data;

}

In D, this is a shorthand for a so-called eponymous template that, written out in full, is:

template Box(T)

{

struct Box

{

T data;

}

}

Its corresponding AST tree looks something like this:

When you instantiate the template with a declaration like:

Box!int intBox;

for example, the type Box!int may not yet be defined. However, the compiler automatically makes a copy of the AST subtree under the TemplateBody node and substitute int for every occurrence of T in it. This generated AST subtree is then included among the program's declarations:

Which corresponds to this code fragment:

struct Box!int

{

int data;

}

(Note that you cannot actually write this in your source code; the name Box!int is reserved for the template expansion process and cannot be directly defined by user code.)

Similarly, if you instantiate the same template with a different declaration, such as:

Box!float floatBox;

it is as if you had declared something like this:

struct Box!float

{

float data;

}

Box!float floatBox;

In effect, you are creating new AST subtrees every time you instantiate a template, which get grafted into your program's AST.

One common use for this feature is to avoid boilerplate code: you can factor out commonly-repeated bits of code into a template, and the compiler would automatically insert them each time you instantiate the template. This saves you a lot of repetitive typing, and thereby allows you to adhere to the DRY (Don't Repeat Yourself) principle.

D templates, of course, go much, much farther than this, but a full exposition of templates is beyond the scope of this article.

The important point here is that template expansion happens in the AST manipulation phase of compilation, and therefore template arguments must be known at the time the code in question is in its AST manipulation phase. In common D parlance, we tend to say that template arguments must be known "at compile-time", but this is often not precise enough. It is more precise to say that template arguments must be known during the AST manipulation phase of compilation. As we shall see later, being more precise will help us understand and avoid many of the common problems that D learners encounter when they try to use D's "compile-time" features.

static if

Another powerful feature in the AST manipulation phase of D compilation is static if. This construct allows you to choose a subtree of the AST to be compiled, or, conversely, which a subtree to be pruned. For example:

struct S(bool b)

{

static if (b)

int x;

else

float y;

}

The static if here means that the boolean parameter b is evaluated when the compiler is expanding the template S. The value of must be known at the time the template is being expanded. In D circles, we often say that the value must be known "at compile-time", but it is important to more precise. We will elaborate on this more later.

If the value of b is true, then the else branch of the static if is pruned away from the expanded template. That is, when you write:

S!true s;

it is as if you declared:

struct S!true

{

int x;

}

(You can't actually write this in your source code, of course, since the name S!true is reserved for the template expansion process and cannot be directly defined by user code. But this is just to illustrate the point.)

Note that the else branch is completely absent from the expanded template. This is a very important point.

Similarly, when you write:

S!false t;

it is as if you had declared:

struct S!false

{

float y;

}

Note that the if branch is completely absent from the expanded template. This is also a very important point.

In other words, static if is a choice that affects the effective AST seen by later compilation phases.

To drive this point home further: at this stage, when static if is evaluated, notions like variables, memory, and I/O don't exist yet. We are manipulating the structure of the program; not its execution. In the above code, x and y are merely identifiers; they have not yet been assigned any semantic meanings like variables or data fields that reside in a concrete offset in the struct. This is done in subsequent stages of compilation.

Why is this point so important? Because it relates to a common misunderstanding of D's "compile-time" capabilities as it relates to CTFE, or Compile-Time Function Evaluation. Let's talk about that next.

CTFE

CTFE stands for Compile-Time Function Evaluation. This is an extremely powerful feature that D offers, and is similar to C++'s constexpr feature (though in the author's opinion far more powerful).

The first and most important thing to understand about CTFE is that it happens after the AST manipulation phase has been completed. More precisely, it happens when the compiler has "finalized" the AST of that part of the program, and is performing semantic analysis on it. Identifiers are assigned meanings as modules, functions, function arguments, variables, and so on, control-flow constructs like if and foreach are given their meanings, and other semantic analyses such as VRP (Value Range Propagation) are performed.

Constant-folding

Part of this semantic analysis is constant-folding. For example, if we wrote something like this:

int i = 3*(5 + 7);

it would be a waste of computational resources to perform the indicated calculation (add 5 to 7, multiply the result by 3) at runtime, because all the arguments of the calculation are constants known to the compiler, and the result will never change at runtime. Of course, this particular example is trivial, but imagine if this line of code were inside a busy inner loop in a performance-critical part of the program. If we folded this constant expression into its resulting value, the program would run faster, since it wouldn't have to repeat this same calculation over and over, and indeed, it wouldn't even need to perform the calculation at all, since the answer is already known by the compiler. The answer could be directly assigned to i:

int i = 36;

This process is called constant-folding, and is implemented by basically all modern compilers of any language today. So it is nothing new. The D compiler, however, takes this game to a whole new level.

Constant-folding, glorified

Consider, for example, this code:

int f(int x)

{

return x + 1;

}

int i = 3*(5 + f(6));

Again, if we perform the calculation manually, we see that the value of the expression is 36. However, this time, the constants involved in the expression are masked by the function call to f. But since the definition of f is visible to the compiler, and all it does is to add a constant value 1 to its argument, the compiler should be able to deduce that, again, the expression is constant and does not need to be performed at runtime.

But what if f was more complicated than merely adding a constant to its argument, for example:

int f(int x)

{

int a = 0;

for (int i=1; i <= x/2; i++)

{

a += i;

}

return a + 1;

}

int i = 3*(5 + f(6));

Again, the value of f(6) is constant, but in order to know its value, the compiler has to effectively run this function during compilation. And in order to do that, the compiler essentially has to compile the body of f into a state where it can be run inside a D interpreter embedded inside the compiler.

This, in a nutshell, is how CTFE came about in the history of D. There is a limit as to how much this D virtual machine can do, but there's an ongoing trend of expanding its capabilities as far as possible. As of this writing, the D compiler is able to execute significant chunks of the Phobos standard library during compilation, thus making many library features accessible during compilation without needing to implement them the second time inside the compiler. Furthermore, a focused effort is being spearheaded by Stefan Koch to replace the current CTFE engine with an even more powerful one based on a bytecode interpreter, that promises superior CTFE performance and better memory management, and eventually, more features.

Forcing CTFE evaluation

Of course, the compiler usually does not always go this far in attempting to constant-fold an expression. Past a certain level of complexity, it makes more sense for the compiler to simply leave it up to the generated runtime code to compute the value of the expression. After all, it may be that the entire purpose of the program is to compute the constant answer to a complex mathematical problem. It wouldn't make sense to perform such a computation in the slower CTFE engine instead of letting the generated executable run the computation at native execution speed.

Being able to perform such a computation at compile-time when needed, however, can be very useful. For example, you could precompute values for a lookup table that gets stored into the executable, so that there is no runtime cost associated with initializing the table when the program starts up. As such, it is sometimes desirable to force the compiler to evaluate an expression at compile-time rather than relegating it to runtime. The usual idiom is to assign the result of the computation to a construct that requires the value to be known at compile-time, such as an enum or a template parameter. For example:

int complicatedComputation(int x, int y)

{

return ...; /* insert complicated computation here */

}

void main()

{

// The compiler may choose to evaluate this at compile-time or not,

// depending on how complex the computation is.

int i = complicatedComputation(123, 456);

// Since the value of an enum must be known at compile-time, the

// compiler has no choice but to evaluate it in CTFE. This is the

// standard idiom for forcing CTFE evaluation of an expression.

enum j = complicatedComputation(123, 456);

}

In discussions among D users, when CTFE is mentioned it is usually in this context, where CTFE evaluation is forced because the value of an expression must be known at compile-time.

A different "compile-time"

Coming back to the topic at hand, though, notice that when we speak of CTFE, we speak of "virtual machines" and "bytecode interpreters". This implies that by this point, the code has gone far enough through the compilation process that it is essentially ready to be turned into runtime executable code.

In particular, this means that it has long passed the AST manipulation stage. Which in turn implies that code that can be evaluated by CTFE can no longer make use of AST manipulation constructs like static if. In order for CTFE to work, semantic notions such as variables and memory must have been assigned to various constructs in the code, otherwise there is nothing to execute or interpret. But in the AST manipulation phase, such semantics have not yet been assigned -- we're still manipulating the structure of the program.

Thus, even though CTFE happens at "compile-time" just as AST manipulation happens at "compile-time", this is actually a different "compile-time". It is much closer to "runtime" than AST manipulation, which represents a much earlier stage in the compilation process. This is why the terminology "compile-time" is confusing: it gives the false impression that all of these features, AST manipulation and CTFE alike, are lumped together into a single, amorphous blob of time labelled "compile-time", and that the compiler can somehow make it all magically just work, by fiat.

The point to take away from all this, is that AST manipulation constructs are applied first, and then the code may be used in CTFE later:

- AST manipulation → CTFE

The unidirectional arrow indicates that a piece of code can only move from AST manipulation to CTFE, but never the other way round.

Of course, in practice, this simple picture is only part of the story. To understand how it all works, it's best to look at actual code examples. So let's now take a look at a few common pitfalls that D learners often run into, and see how this principle applies in practice.

Case Study: Reading CTFE variables at AST manipulation time

A rather common complaint that's brought up in the D forums from time to time pertains to code along these lines:

int ctfeFunc(bool b)

{